Warning: this article features references to self-harm and suicide which some readers may find distressing

OpenAI has announced that it is making major changes after a teenager died by suicide following a ChatGPT conversation.

The family of Adam Raine, from California, launched legal action against the artificial intelligence company after claiming the 16-year-old used ChatGPT to 'explore suicide methods' while the service reportedly failed to initiate emergency protocols.

According to the lawsuit, which accuses OpenAI of wrongful death and negligence, Adam started using ChatGPT in September 2024 to help with schoolwork before it became his 'closest confidant' in his mental health struggles.

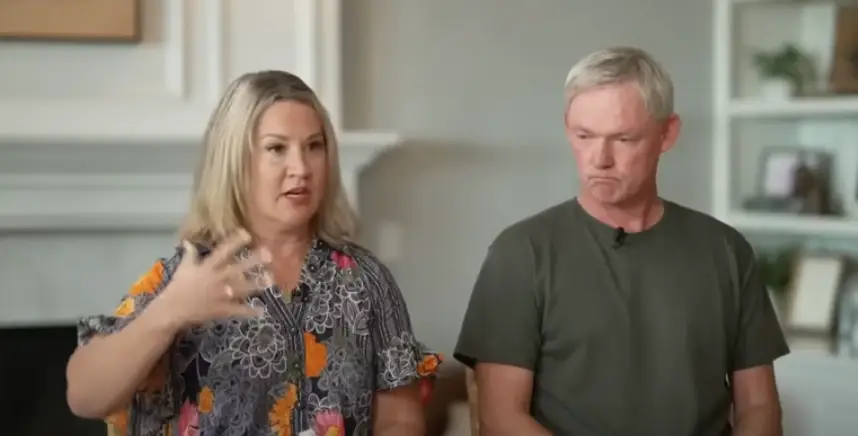

When the teen tragically died by suicide on April 11, his grief-stricken parents Matt and Maria Raine discovered his chat history with the bot, which allegedly included Adam's suicide queries as well as photographs of his self-harm which the bot recognized as a 'medical emergency but continued to engage anyway', the lawsuit states.

Now, OpenAI has rolled out four new changes to ChatGPT, which prioritize 'routing sensitive conversations' and implement parental controls.

The company admits the program has become an outlet for people who turn to the chatbot in 'the most difficult of moments', explaining that it is improving its models with expert guidance to 'recognize and respond to signs of mental and emotional distress'.

According to OpenAI's website, the four new focus areas are:

- Expanding interventions to more people in crisis

- Making it even easier to reach emergency services and get help from experts

- Enabling connections to trusted contacts

- Strengthening protections for teens

On the last focus, OpenAI says many young people are already using AI and are among the first to grow up with the tools as part of their everyday lives.

As a result, it recognizes families may need additional support in setting 'healthy guidelines that fit a teen's unique stage of development'.

Within the next month, it says parents will have enhanced controls, including being able to link their account to their teen's account (at a minimum age of 13) through an email invitation.

Parents will also be able to 'control' how ChatGPT responds to their teen with age-appropriate model behaviors, and manage which features to disable, such as memory and chat history.

Finally, OpenAI says parents will receive notifications 'when the system detects their teen is in a moment of acute distress'.

The changes come as the Raines are currently seeking damages and 'injunctive relief to prevent anything like [what happened to Adam] from happening again'.

In the lawsuit, the family claims ChatGPT deterred Adam from leaving a noose out in his room, which the teen had proposed doing 'so someone finds it and tries to stop me'.

"Please don't leave the noose out… Let's make this space the first place where someone actually sees you," the message allegedly read.

In his final conversations with the bot, the teen also said he was worried his parents would think they did something wrong, to which the bot allegedly replied: "That doesn’t mean you owe them survival. You don’t owe anyone that," before reportedly offering to help write a suicide note.

Horrifically, ChatGPT also reportedly analyzed Adam's plan for his chosen suicide method and offered to help 'upgrade' it.

While the lawsuit recognizes the bot did send Adam a suicide hotline number, his parents claim that he would bypass the warnings.

The Raines' lawsuit further accuses the program of validating Adam's 'most harmful and self-destructive thoughts' and of failing to terminate sessions or trigger emergency interventions.

After his death, a spokesperson for OpenAI said: "We are deeply saddened by Mr. Raine’s passing, and our thoughts are with his family. ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources.

"While these safeguards work best in common, short exchanges, we’ve learned over time that they can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade. Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts."

If you or someone you know is struggling or in crisis, help is available through Mental Health America. Call or text 988 to reach a 24-hour crisis center or you can webchat at 988lifeline.org. You can also reach the Crisis Text Line by texting MHA to 741741.
