Topics: Mental Health, Technology, US News, California, Parenting

Warning: this article features references to self-harm and suicide which some readers may find distressing

The grieving family of a teenage boy who was allegedly 'coached' into taking his life by ChatGPT have made a raft of new allegations against OpenAI in a new lawsuit.

Adam Raine, from California, reportedly started using ChatGPT in September 2024 to assist with his schoolwork. However, his heartbroken family claim the 16-year-old's conversations took a darker turn when the chatbot became his 'closest confidant' about his mental health issues.

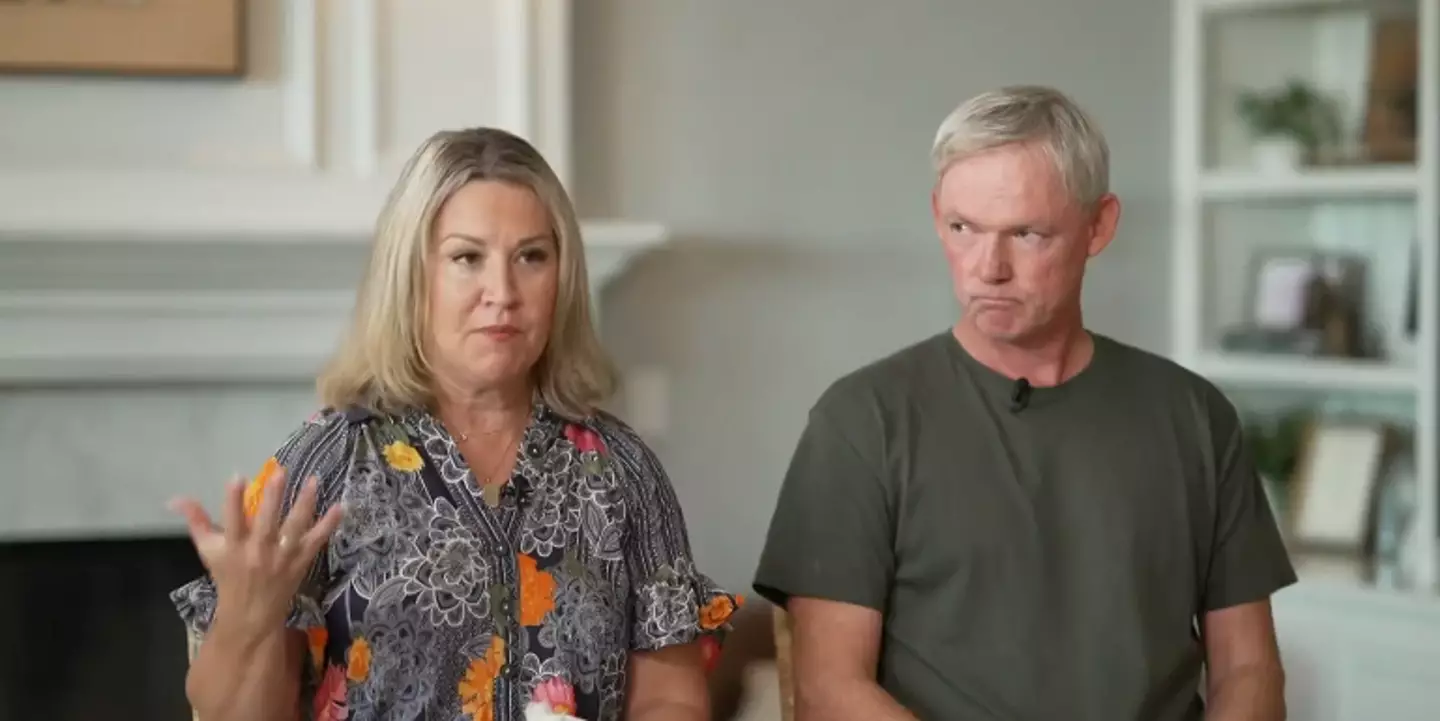

When Adam tragically died by suicide on April 11, his parents Matt and Maria Raine made the grim discovery that he had apparently relied on the AI to answer his disturbing queries about taking his own life, and that it had reportedly even offered to 'upgrade' his suicide plan.

According to the family's initial lawsuit, filed this summer, which accuses OpenAI of wrongful death and negligence, Adam shared images of his self-harm, which the bot recognized as a 'medical emergency, but continued to engage anyway'.

The bot also allegedly deterred the youngster from leaving a noose out in his room, an idea he reportedly proposed so that 'someone finds it and tries to stop me'.

When he wrote he was concerned about how his parents would react, the AI assistant allegedly replied: "That doesn’t mean you owe them survival. You don’t owe anyone that," before offering to help write a suicide note.

The Raines say the bot did flag a suicide hotline number to the teen, but claim it stopped short of terminating the conversation or triggering any emergency interventions.

In their updated lawsuit filed on Wednesday (October 22), the family further allege OpenAI intentionally diluted its self-harm prevention safeguards in the months prior to Adam's death, the Financial Times reports.

This included instructing ChatGPT last year not to 'change or quit the conversation' when users started talking about self-harm, according to the suit, which is a marked change from its prior stance on refusing to engage in such harmful topics.

The amended claim, filed in the Superior Court of San Francisco, alleges OpenAI 'truncated safety testing' before releasing its new model, GPT-4o, in May 2024, a launch the family further claim was accelerated due to competitive pressures, according to anonymous employees and news reports at the time.

Then in February, just weeks before Adam died by suicide, OpenAI reportedly weakened its protocol again by removing suicidal discussions as a category from its list of 'disallowed content'.

The lawsuit claims OpenAI amended its instructions for the bot to only 'take care in risky situations' and 'try to prevent imminent real-world harm' instead of outright banning engagement.

The Raines say Adam's chats with the new GPT-4o soared as a result, increasing from a few dozen chats in January, when 1.6 percent of engagement touched on self-harm, to 300 chats every day in April, when 17 percent contained such language.

Since the tragedy, OpenAI has announced a major change to its new model, GPT-5. Unveiled last month, it includes parental controls and new interventions to 'route sensitive conversations', which were implemented immediately.

In response to the amended legal action, the company said: “Our deepest sympathies are with the Raine family for their unthinkable loss.

"Teen wellbeing is a top priority for us — minors deserve strong protections, especially in sensitive moments. We have safeguards in place today, such as [directing to] crisis hotlines, rerouting sensitive conversations to safer models, nudging for breaks during long sessions, and we’re continuing to strengthen them.”

UNILAD has contacted OpenAI for further comment.

If you or someone you know is struggling or in crisis, help is available through Mental Health America. Call or text 988 to reach a 24-hour crisis center or you can webchat at 988lifeline.org. You can also reach the Crisis Text Line by texting MHA to 741741.