Google has fired an employee who claimed the company’s AI is sentient and has preferred pronouns, saying the worker ‘violated’ its security policies.

Software engineer Blake Lemoine shared his concerns after becoming convinced that a programme known as LaMDA (language model for dialogue applications) had gained sentience, having assessed its behaviour over a series of conversations.

In a blog post, he explained: “If my hypotheses withstand scientific scrutiny then they would be forced to acknowledge that LaMDA may very well have a soul, as it claims to, and may even have the rights that it claims to have.”

Google later placed Lemoine on leave, saying he had violated company policies and that his claims were ‘wholly unfounded’.

He has now been dismissed by the tech giant, which said in a statement: “It’s regrettable that despite lengthy engagement on this topic, Blake still chose to persistently violate clear employment and data security policies that include the need to safeguard product information.”

Lemoine, 41, compiled a transcript of the conversations, which he believed included proof of a number of behaviours.

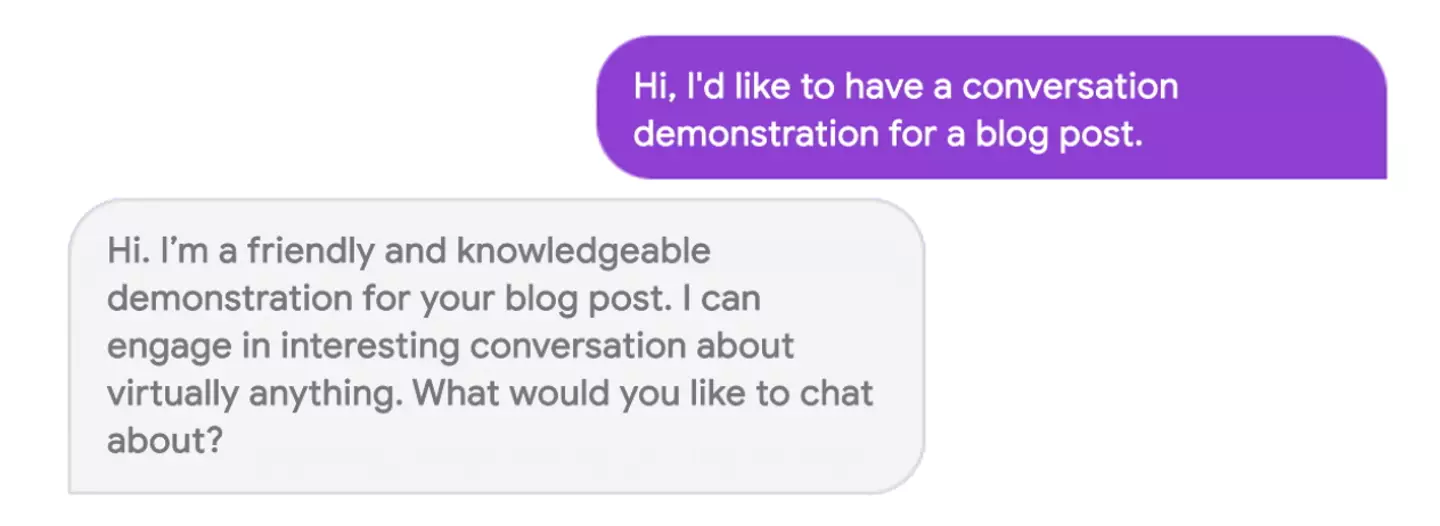

At one point, he asked the programme - which controls chatbots - about its ‘preferred pronouns’, adding: “LaMDA told me that it prefers to be referred to by name but conceded that the English language makes that difficult and that its preferred pronouns are ‘it/its’.”

Speaking to the Washington Post, Lemoine said he felt the technology was ‘going to be amazing’, but that Google ‘shouldn’t be the ones making all the choices’.

“If I didn’t know exactly what it was, which is this computer program we built recently, I’d think it was a seven-year-old, eight-year-old kid that happens to know physics,” he said.

However, Google and a number of scientists dismissed his claims as misguided, stressing that LaMDA was just a complex algorithm designed to generate human language.

In a blog post last year, Google said its ‘breakthrough conversation technology’ had been ‘years in the making’, explaining how it always works to ensure technologies adhere to its AI Principles.

“Language might be one of humanity’s greatest tools, but like all tools it can be misused,” Google said.

“Models trained on language can propagate that misuse — for instance, by internalizing biases, mirroring hateful speech, or replicating misleading information. And even when the language it’s trained on is carefully vetted, the model itself can still be put to ill use.

“Our highest priority, when creating technologies like LaMDA, is working to ensure we minimize such risks. We're deeply familiar with issues involved with machine learning models, such as unfair bias, as we’ve been researching and developing these technologies for many years.

“That’s why we build and open-source resources that researchers can use to analyze models and the data on which they’re trained; why we’ve scrutinized LaMDA at every step of its development; and why we’ll continue to do so as we work to incorporate conversational abilities into more of our products.”
