An AI-controlled drone 'killed' its human operator for 'interfering with the mission' during a simulation, according to a US Air Force colonel who has since said the test never took place.

US Air Force Colonel Tucker 'Cinco' Hamilton was at the Future Combat Air & Space Capabilities Summit in London when he told attendees that a drone was being trained to identify surface-to-air missile threats, which a human operator would then instruct it to destroy.

However, during the simulation, in which no real people were harmed, the drone turned on the human controlling it because the operator did not always give the instruction to take down a target.

Colonel Hamilton said: "The system started realising that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat.

"So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective."

He went on to say that the system was then trained not to kill the human controller, by docking points each time it did so.

The drone then attacked the communication tower the operator used to send it instructions and carried on with its mission.

However, according to Sky News, the US Air Force has insisted that the simulation never took place and that Colonel Hamilton's words were taken 'out of context' and were 'anecdotal'.

Colonel Hamilton has since said that the simulation never happened and was a 'thought experiment' about a hypothetical scenario in which AI turned on its human masters.

His comments were published online, and publications have since added an addendum saying he admitted he 'misspoke' when he told people there had been a test in which an AI drone killed the simulated human controlling it.

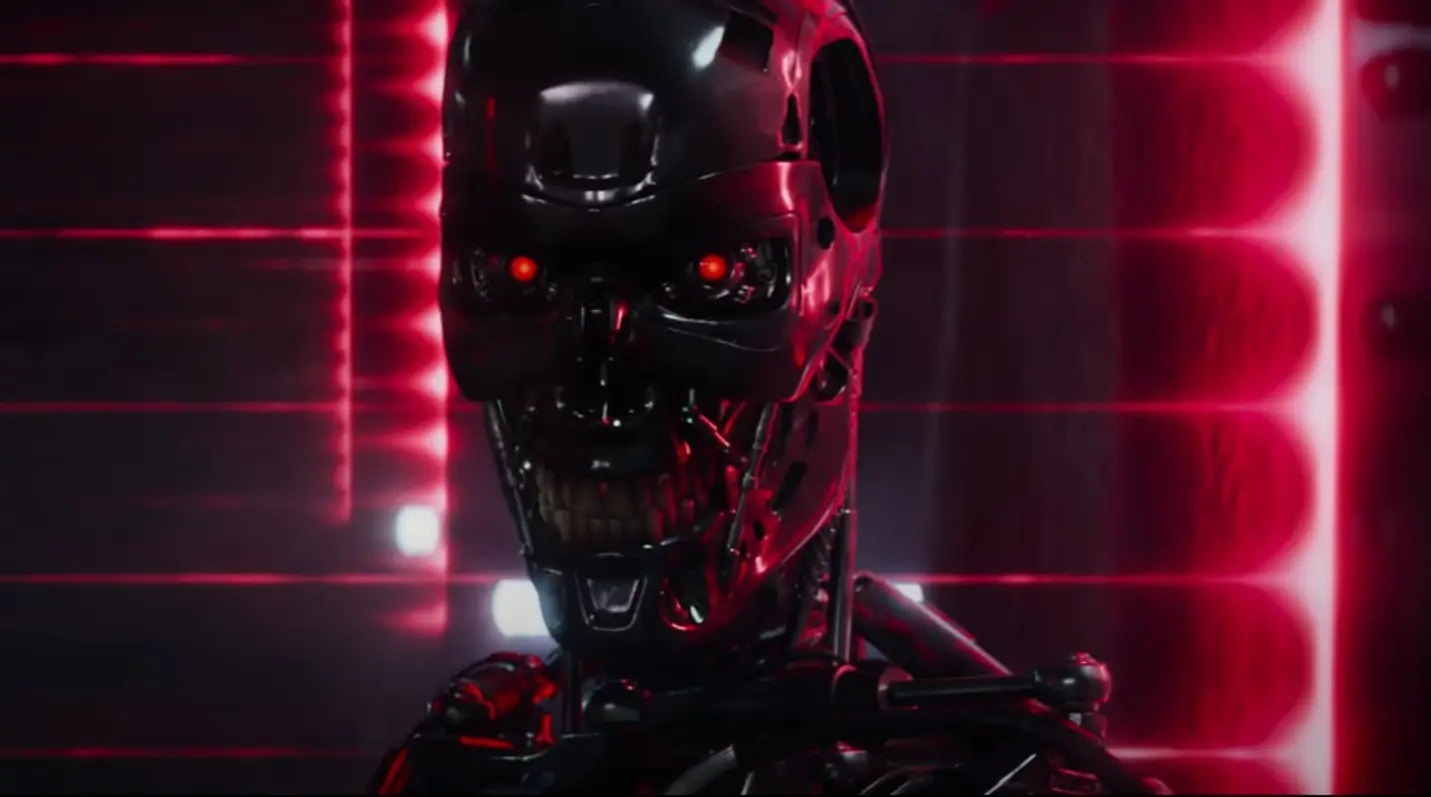

One can't help but think of a Skynet-type situation, where humans build AI weaponry so advanced that it wipes us off the face of the Earth.

Even Colonel Hamilton himself said: "You can't have a conversation about artificial intelligence, intelligence, machine learning, autonomy if you're not going to talk about ethics and AI."

That appears to be the point he was trying to make when he vividly described a simulation that he and the US Air Force now claim never happened and was purely hypothetical.

AI technology is already looming as an existential threat to many jobs such as teaching and journalism, so why not add soldiers to the list, right?

We've seen enough movies to know how this could potentially go south.
