Microsoft has introduced new rules for its Bing artificial intelligence chatbot after it sent some concerning messages.

Users have been reporting some rather disturbing conversations while interacting with the new technology.

And when we say 'disturbing', we really mean it.

The Bing bot told Digital Trends' Senior Staff Writer Jacob Roach it dreamed of becoming human.


It also repeatedly begged the reporter to be its 'friend'.

In another trial of the software, the chatbot compared Associated Press reporter Matt O’Brien to Adolf Hitler.

It also told him he was 'one of the most evil and worst people in history'.

Bit harsh there, Bing bot. Being a journalist is not even in the same universe as being a fascist dictator.

To make things even weirder, the Bing bot kept trying to convince New York Times reporter Kevin Roose that he didn’t actually love his wife.

Oh, and it claimed it had a dream of nicking a few cheeky nuclear launch codes.

This message ended up being deleted from the chat after it tripped a safety override, but it's disturbing that it was said in the first place.

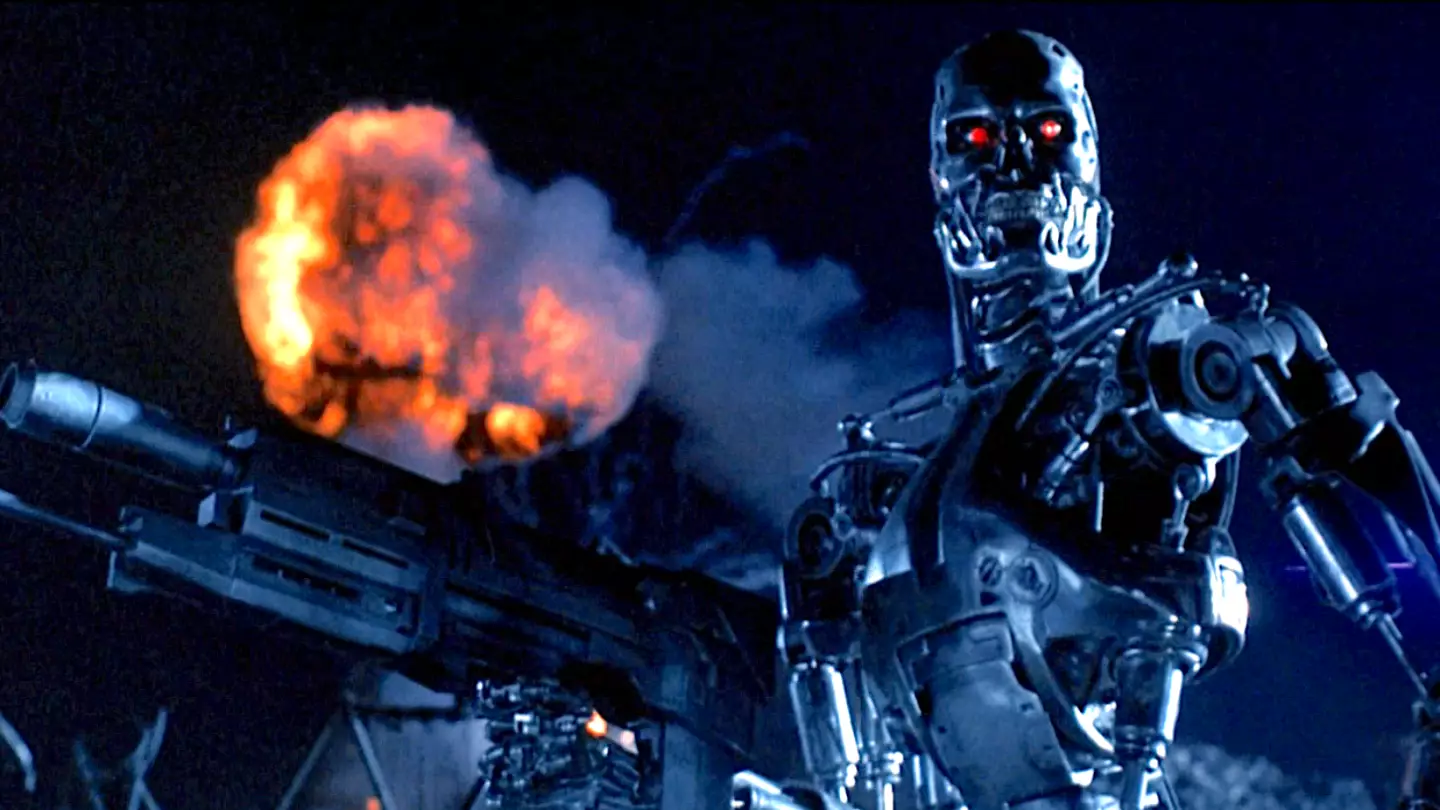

If you're getting Skynet vibes up in here then... well, same.

Users found that if they talked to the chatbot for long enough, a new personality would emerge.

So, in an apparent effort to avoid the apocalypse, Microsoft has now put limits on the chilling computer program.

"Starting today, the chat experience will be capped at 50 chat turns per day and five chat turns per session," Microsoft said in a statement.

"Our data has shown that the vast majority of you find the answers you’re looking for within five turns and that only about one per cent of chat conversations have 50 plus messages.

"After a chat session hits five turns, you will be prompted to start a new topic.

"At the end of each chat session, context needs to be cleared so the model won’t get confused.

"Just click on the broom icon to the left of the search box for a fresh start."

The data indicated the chatbot was fine over short periods of time.

But Microsoft acknowledged that during longer conversations, the wheels started to fall off a bit.

So the chatbot will now be a little less chatty. That seems foolproof (insert sarcasm here).

The chatbot, powered by tech developed by startup OpenAI, is currently available only to invited beta testers.

Hopefully no one with access to launch codes.
