What would you do if you were sent a link online and opened it up to see your own face featured in a pornographic video you'd never recorded?

For most of us, thankfully, this situation is hard to imagine.

But for Taylor Klein, it was her reality.

Taylor, who is using a pseudonym to remain anonymous, was a 22-year-old college student when she was sent a deepfake video of herself.

"What the f**k is happening?" That was the question running through her mind as she watched the video. She told UNILAD: "I was incredibly shocked to see my face on a pornographic video and my mind was all over the place. I could not believe what I was seeing or why someone would do this to me."

Taylor was utterly confused. She was aware of the technology behind deepfakes, but didn't actually know what a deepfake was until she received the link.

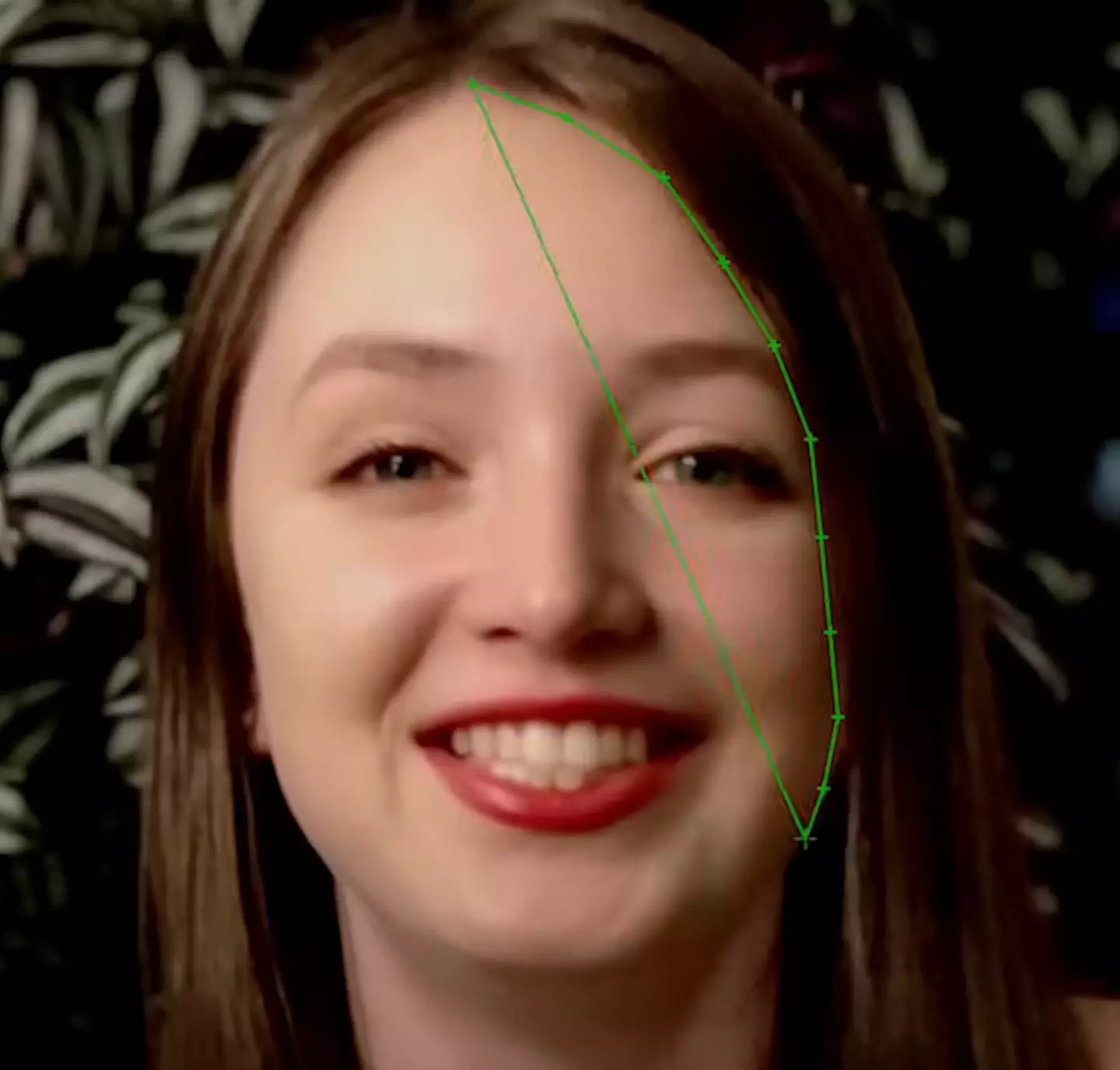

Deepfakes are digitally manipulated images or videos in which one person's likeness is replaced with another's.

In Taylor's case, her face had been swapped in for that of the woman who actually appeared in the explicit video.

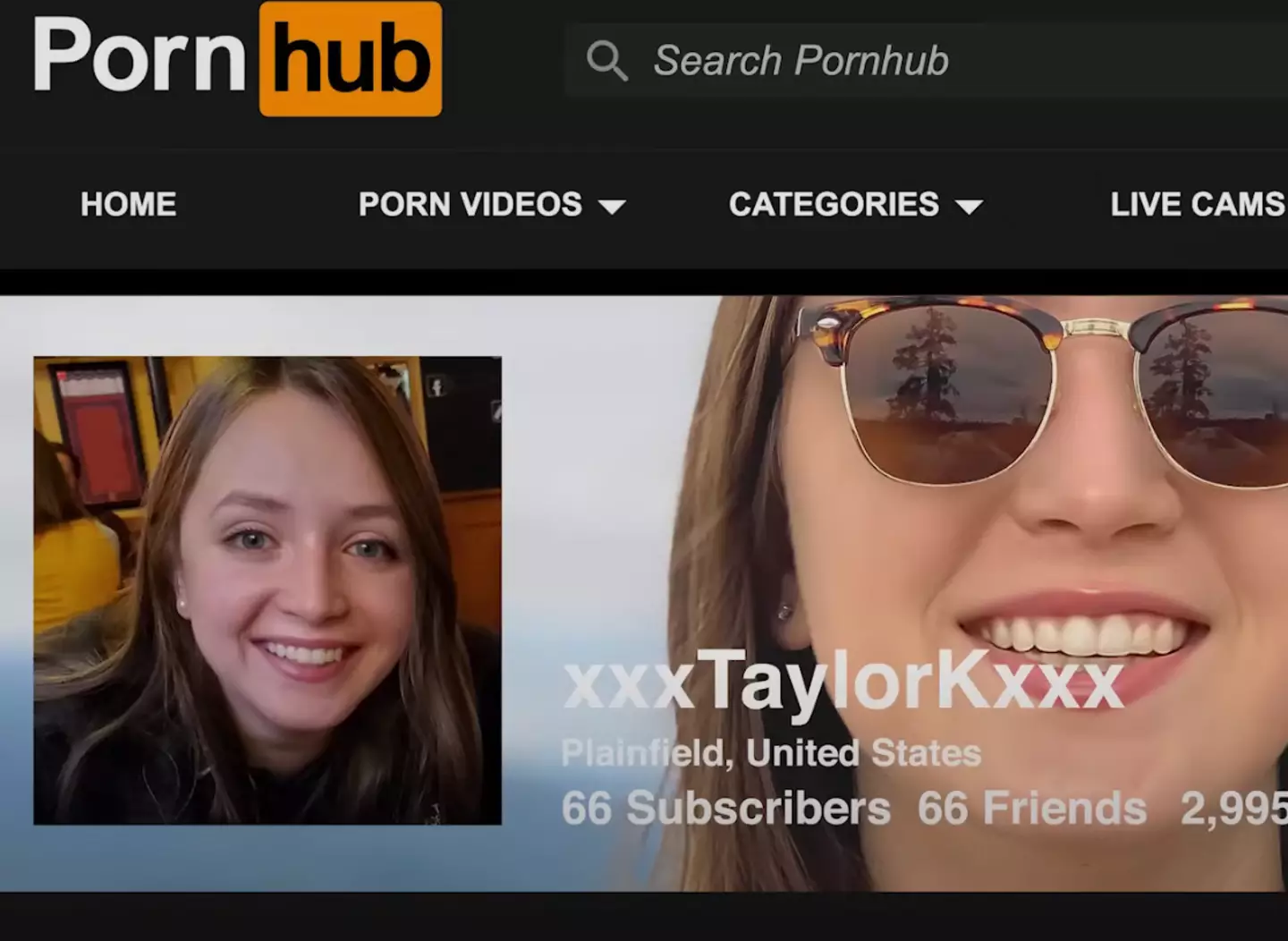

Horrified, the student quickly began looking further into the video and found that it had been posted on a website alongside her personal details, including her real name, school and hometown.

This meant that not only was Taylor's face out there, but that viewers could track her down if they wanted to.

Taylor had no idea who would create such a video of her, but seeing those additional details reinforced her belief that a real person was responsible: this wasn't the random work of some bot.

"It showed me that whoever was responsible for this actively wanted to hurt me on a deeply personal level," Taylor said.

"It made me concerned for my physical safety as well, since so many strangers that were leaving these crude comments now knew who I was and where I was."

Taylor, who was already prone to worrying, said the deepfake only made her fear more intense.

"It put a lot more stress and fear on me, which I believe made my overall anxiety worse," she said.

However, as she learned more about deepfakes, Taylor discovered that the technology also has a positive side.

She decided to use deepfakes to her advantage and tell her story in the film Another Body, in which a deepfake is used to replace her face with an actor's, keeping her anonymous.

"The opportunity to speak about the deepfakes anonymously allowed me to share what happened to me and discuss the dangers of deepfakes without fear of it following me throughout my personal life more than it already was," she explained.

"Anonymity gave me the option to speak about the experience on my own terms and mitigated the risk of [the perpetrator] retaliating in any way."

Taylor hopes other people could find the technology helpful in the same way, explaining: "In the way that I was able to protect my identity using a deepfake, I think other people who want to speak up about their experiences but are afraid to could potentially have this [as] an option."

Another Body shows how Taylor was desperate to get the video removed as quickly as possible, so she contacted the police under the impression that they would 'immediately go figure out who was responsible for this and then arrest the person'.

Officers took down the details of the incident, but since being deepfaked isn't technically a crime in her home state of Connecticut, they had to classify it as a case of Taylor being impersonated online.

Over the following days, Taylor called the department for updates, but to her dismay police had made little progress in finding out who was behind the videos.

The student decided to take matters into her own hands and looked further into the deepfake to try to find out who might be responsible.

Before long, Taylor realized she wasn't the only person she knew who had been deepfaked this way.

Other women from Taylor's school had also had their likenesses used in deepfake videos that had been shared online, which led Taylor to conclude that the culprit must be someone they all knew.

"I never really thought it could be a stranger since I could tell the pictures were taken right from my social media, so I assumed it had to be someone who had access to those photos at that time," Taylor explained.

Working with one of the other women who had been deepfaked, Taylor narrowed down the list of potential perpetrators until she was left with a single name: an old friend identified only as 'Mike'.

Looking back now, Taylor has a 'high degree of confidence' that she's found the person responsible, though Mike has denied his involvement.

"I was (and still am) completely disgusted that he did this over and over again to different women in his life, indicating to me that he thought it was an acceptable way to treat women," she said.

"It showed me just how little he respected us and turned some of my initial fear about the deepfakes into anger."

Taylor reported Mike to the police, but there are no federal laws in the US criminalizing the creation or sharing of non-consensual deepfake porn. As a result, Taylor was eventually told there wasn't much that could be done.

"I was disappointed, but it had been such a long process at that point that I was not shocked anymore," Taylor recalled.

"I remember that evening after I found out that my case was being closed out, I did feel mostly apathetic towards everything else."

Taylor succeeded in having the videos taken down, but she believes the lack of repercussions against perpetrators could make deepfaking more common.

"As the technology advances, the deepfakes will probably become even more realistic looking (potentially making them that more dangerous)," she said.

Taylor now wonders whether she would ever have seen the deepfake if someone she knew hadn't sent her the link directly. She pointed out that one fake photo had been posted on the anonymous imageboard 4chan around a year before the deepfake pages were created.

"The only reason I ever saw that post later on was because I was actively looking for more evidence," she explained.

Taylor continued: "Before the link got sent to me, I attributed all of the messages I was getting from random people to spam and probably would have kept doing that. It just never would have been on my radar that this could happen."

Taylor did find out, though, and many of the repercussions are long-lasting.

"It has caused me to be a little less trusting of people by default because it was someone I once had a close friendship with that likely made these pages," she said.

"I was not super active on social media before the deepfakes, but I am even more private now. I just don’t really have an interest anymore to share anything on social media. Anytime I get a follow request from someone I don’t know, I wonder if something new could have been posted or if someone found something else that I don’t know about.

"I also do wonder if 'Mike' would ever do this again if, for example, he sees the documentary and figures out that he’s 'Mike'. I think I will have these kinds of unanswered questions and nervousness from time to time for the foreseeable future."

Taylor noted that because she managed to get her deepfakes taken down, she has been able to avoid 'the worst case long term effects that [she] was afraid of when [she] first saw the deepfakes'.

However, Taylor pointed out that the US government still has 'a responsibility to protect people from non-consensual content'.

"[The government needs] to enact [or] amend regulations that keep up with the rapid growth of technology," she continued.

"I think more concrete legislation is a good (and necessary) step in making sure that this technology has regulation and companies have guidelines that they are required [to] follow that protect the public."

Another Body is available to stream now on Prime Video and Apple TV.